FIRE-RES Geo-Catch: a mobile application to support reliable fuel mapping at a pan-European scale

iForest - Biogeosciences and Forestry, Volume 16, Issue 5, Pages 268-273 (2023)

doi: https://doi.org/10.3832/ifor4376-016

Published: Oct 19, 2023 - Copyright © 2023 SISEF

Technical Advances

Abstract

We present a browser-based App for smartphones, freely available to end-users, for collecting geotagged and oriented photos depicting vegetation biomass and fuel characteristics. Our solution builds on the advantages of smartphones, allowing them to be used as easy-to-use sensors that collect data by imaging forest ecosystems. The strength and innovation of the proposed solution rest on the following points: (i) a low-memory-footprint App that streams images and data using as little data volume and memory as possible; (ii) JavaScript APIs that allow the App to be launched either from a browser or as an installed App, thanks to features such as service workers and the Progressive Web App (PWA) framework; (iii) storage of both image and survey data (geolocation and sensor orientation) internally on the device in an indexed database, with synchronization to a cloud-based server once the smartphone is online and all other safety checks have been passed. The goal is to obtain properly positioned and oriented photos that can be used as training and testing data for the future estimation of surface fuel types through automatic segmentation and classification via Machine Learning and Deep Learning.

Keywords

Mobile Data Collection, Citizen Science, Smartphone Position and Orientation, Wildfire, Image Analysis, Progressive Web Apps

Introduction

Forest fires are a complex phenomenon driven by different biological, physical, and human factors, at least in southern Europe, which experiences around 85% of the annual burned area on the continent ([6]). In 2022, the burned area increased to 837,000 ha, with countries such as Romania, France, and Portugal joining Spain and Italy as the largest contributors to the burned area within the EU27 ([17]). Interactions between extreme climate conditions, vegetation, and human behaviour are the most critical factors driving forest fires ([5], [4]), not only in southern Europe but, more recently, also in northern and western Europe. Fire activity is also influenced by other variables, such as topography, stand and forest fuel characteristics, size and compartmentalization of forests, land ownership fragmentation, landscape vegetation, management practices, land use changes, rural exodus, and urban expansion ([21], [3]). These variables combine in various ways to influence wildfire risk. Through their activities and behaviour, humans mainly determine when and where fires are ignited and how they may eventually propagate. In Europe, human activities accounted for more than 95% of ignitions ([10]). Therefore, if new prevention-oriented tools could be created and effectively used, they could help reduce human-caused ignitions and relieve some of the burden that fire management agencies must carry each fire season (fewer ignitions, less fatigue, and a better and more timely first response).

Several such initiatives have been developed by forest services, civil protection agencies, academia, and industry, focusing on the importance of effectively mapping fuel types across extended landscapes. The lack of updated and accurate fuel maps for almost all European countries (with the exception of some southern countries) has serious implications for how fire risk is estimated, as it makes it impossible to run fire behaviour simulations or daily fire hazard predictions with methods similar to those applied in the latest version of the US National Fire Danger Rating System (NFDRS) or in Wildfire Analyst, which is currently implemented in numerous fire agencies.

LANDFIRE, a concerted project aimed at determining fuel types at the national scale, was started many years ago by the United States Forest Service using the vegetation fuel groups developed by Anderson ([1]) and Scott & Burgan ([18]); more recently, an adaptation to European landscapes was carried out by Aragoneses et al. ([2]). However, mapping fuel types over large areas inherently leads to uncertain results, mostly because of the lack of ground data to train the models. Producing a reliable, fine-scale pan-European fuel map requires a rigorous process for validating spatial outputs produced with remote sensing, geoprocessing, Machine Learning (ML), and Deep Learning (DL) techniques against ground-obtained data (i.e., location, photographs, and fuel characterization). Keane & Dickinson ([9]) and Tinkham et al. ([20]) suggested a photoload sampling technique to support this kind of surface fuel mapping.

Mapping vegetation characteristics such as fuel types, canopy base height, or biomass is a daunting task because of environmental complexity and the features of interest that must be identified and distinguished in each landscape. Vegetation can show very different vertical and horizontal spatial characteristics: grasslands, shrublands, and forests with short to very tall trees can have vertical structures of high spatial complexity. The latest advances in remote sensing, from satellites to drones and portable sensors, have remarkably improved our capability to map vegetation parameters; vegetation height and biomass can be estimated by combining remote sensing products with predictive models based on different categories of sophisticated ML and DL algorithms. Nevertheless, such predictions are well known to suffer from potentially significant errors, especially when the training process is fed with inaccurate data, the number of training samples is limited, or the samples of the attribute to be predicted do not represent real-world variability. One solution is to conduct field inventories with mobile-based surveying approaches, collecting the data needed for all three steps of AI classification, i.e., training, testing, and validation.

So far, traditional remote sensing products used for fuel mapping in forested lands have been constrained by the inability of sensors to penetrate the canopy and identify understory features, which limits their capacity to capture fuel loads and the distribution of surface fuels ([8]). This limitation is often addressed by generating a set of rules based on the type and state of tree vegetation and/or on-site environmental characteristics ([16]). Regardless of the methodology applied, surface fuel classification relies on general rules or on variables obtained through statistical models that, while based on empirical data, tend to aggregate predictions around means; they thus miss the variability existing in the real world and require expert-based adjustments ([12]). This calls for a specific, broad-scale inventory of the main fuel types, especially forest fuels.

Mobile applications for forestry ([7], [13], [19]) and wildfire purposes already exist. A concrete example for wildfire purposes is WFA Pocket ([14]), which implements the classical fire spread models and has been used by thousands of users worldwide. However, to the best of our knowledge, few mobile applications are widely used to collect field data and characterize fuel types. The FIRE-RES project aims to integrate fire management approaches through a user-friendly and intuitive mobile application that non-experts can use to collect fuel data. Therefore, the main objective of this work is to develop a mobile application that collects oriented and geolocated images in a quick, simple, and reliable manner, thereby gathering user-acquired ground truth data consistently across large areas and capturing the full range of European fuel types and landscapes. The collected images will then be interpreted by experts using an online viewer and standard guides to translate the images into fuel types. To make the process more rigorous, each image will be interpreted by more than one expert, allowing consensus among experts to be checked. If an image receives different interpretations, a consensus is reached through further interaction among experts. This process will provide a robust way to validate the pan-European fuel map we plan to generate in our follow-up research.

Materials and methods

Overview: the FIRE-RES Geo-Catch App and viewer

The FIRE-RES Geo-Catch framework consists of an App for mobile phones (FIRE-RES Geo-Catch App, hereafter “App”) and a web-GIS portal (FIRE-RES Geo-Catch Viewer, hereafter “web-GIS” or “viewer”), both available online via common browsers. The software was developed as a lightweight, easy-to-use smartphone application. By design, it relies on well-supported application programming interfaces (APIs) to collect and share data online with a low memory footprint. The following sections describe the components of the framework, including the App, the viewer, and the storage repositories.

App user interface

In the main interface (Fig. 1), the first icon row displays, from left to right, (i) a light/dark background toggle button, (ii) a link to a manual page that provides detailed information about the App, and (iii) a log button. The latter opens a log panel with a detailed list of messages, which provides useful feedback while the App is being tested and improved internally among FIRE-RES partners.

The second row has a single large button (with a camera icon) that opens the video stream from the device camera. The last button provides a list of available projects from which the user chooses the one to which their field-collected data will be assigned. This choice is mandatory, as each captured image must be linked to a project, which makes the data stored on the server more credible and easier to trace; this is useful for meta-analysis, as system administrators can freely choose the project from which to extract the uploaded information. Another mandatory step upon first use of the App is user validation, described in the next section.

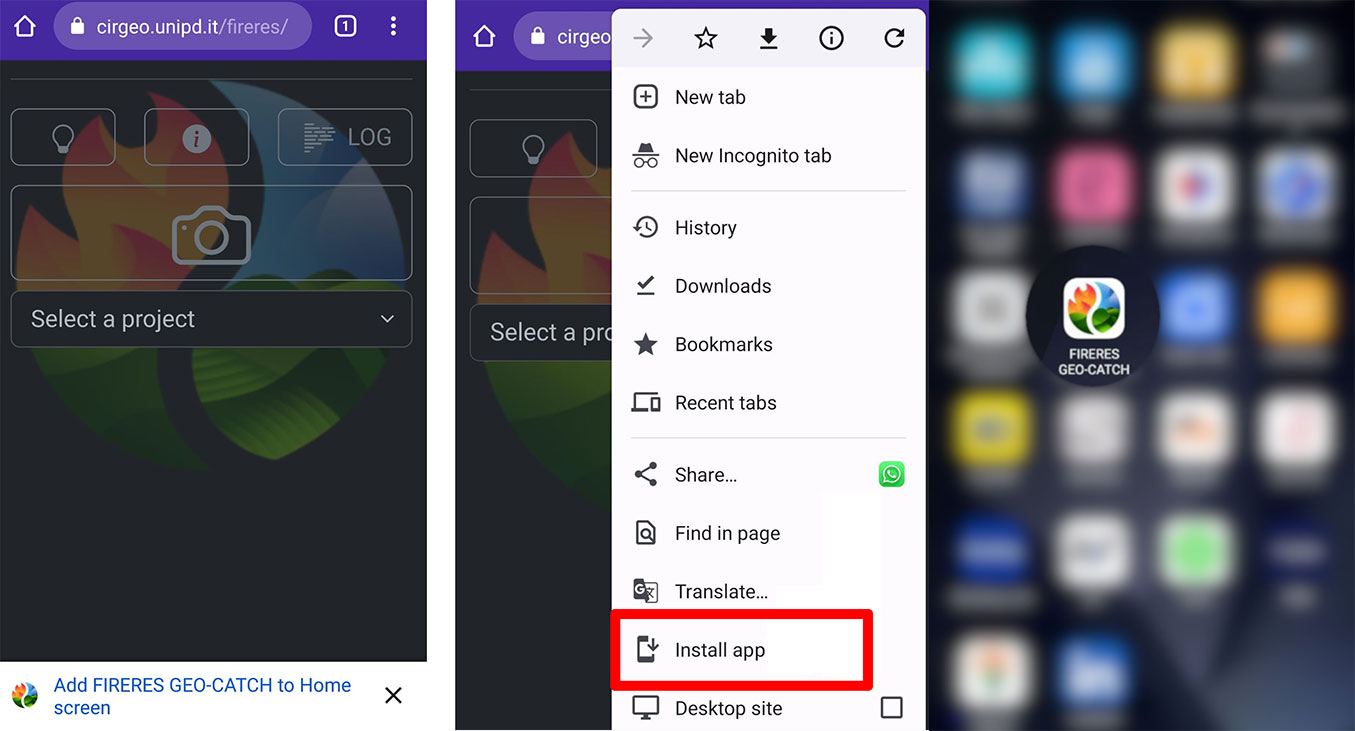

The interface (Fig. 1) uses a dark background by default for battery efficiency. Users can change this option by tapping the first icon at the top left of the interface. This option matters not for aesthetic reasons but because the App will be used in the field, where a light background is a better choice under some outdoor conditions (e.g., strong sunlight).

Fig. 1 - The main App interface. (Left): upon first access from the browser, the user can display a tutorial via the “i” icon; (Center): the user can install the App and is prompted to validate an email address; (Right): the App icon displayed among other mobile applications on the smartphone.

User validation

To avoid abuse of the App, a white-list approach is used. Photos are uploaded and stored on the server only if the user has been validated through an email address, which the user verifies by simply clicking the link provided. To ensure privacy, the email address is not stored anywhere; it is only used to validate the user’s unique ID (UID), a 10-character alphanumeric string persistently assigned to the user’s phone and browser. The same phone will therefore get different UIDs if the App is accessed from different browsers on the device (e.g., Chrome™, Mozilla Firefox). Users also receive a secret token that they can use to delete their images stored on the server. This validation approach preserves the user’s privacy while preventing anonymous, unwanted, or unauthorized use of the App, e.g., uploading random images or attempting to exploit the upload capabilities to intrude into the server.
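The white-list logic described above can be sketched as follows. This is a minimal Python illustration, not the App's actual code (which runs in JavaScript on the client and PHP on the server): the alphabet of lowercase letters and digits is inferred from the example UID `q7p1w0a1nk`, and the names `new_uid` and `Whitelist`, as well as the token length, are hypothetical.

```python
import secrets
import string
from typing import Dict

# Assumption: UIDs use lowercase letters and digits, as in the example "q7p1w0a1nk".
UID_ALPHABET = string.ascii_lowercase + string.digits


def new_uid(length: int = 10) -> str:
    """Generate a random 10-character alphanumeric UID (hypothetical helper)."""
    return "".join(secrets.choice(UID_ALPHABET) for _ in range(length))


class Whitelist:
    """Hypothetical server-side registry of validated UIDs.

    Only the UID and its secret deletion token are kept; the email address
    used for validation is never stored.
    """

    def __init__(self) -> None:
        self._tokens: Dict[str, str] = {}  # uid -> secret deletion token

    def validate(self, uid: str) -> str:
        """Called when the user clicks the emailed link; returns the secret token."""
        token = secrets.token_urlsafe(16)
        self._tokens[uid] = token
        return token

    def accepts_upload(self, uid: str) -> bool:
        """Uploads from unvalidated UIDs are rejected."""
        return uid in self._tokens

    def may_delete(self, uid: str, token: str) -> bool:
        """Allow deletion only with the matching token (constant-time comparison)."""
        expected = self._tokens.get(uid)
        return expected is not None and secrets.compare_digest(expected, token)
```

Because the UID is persisted per browser, opening the App in a second browser on the same phone would call `new_uid` again and require a separate validation, consistent with the behaviour described above.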

Collecting geotagged and oriented images

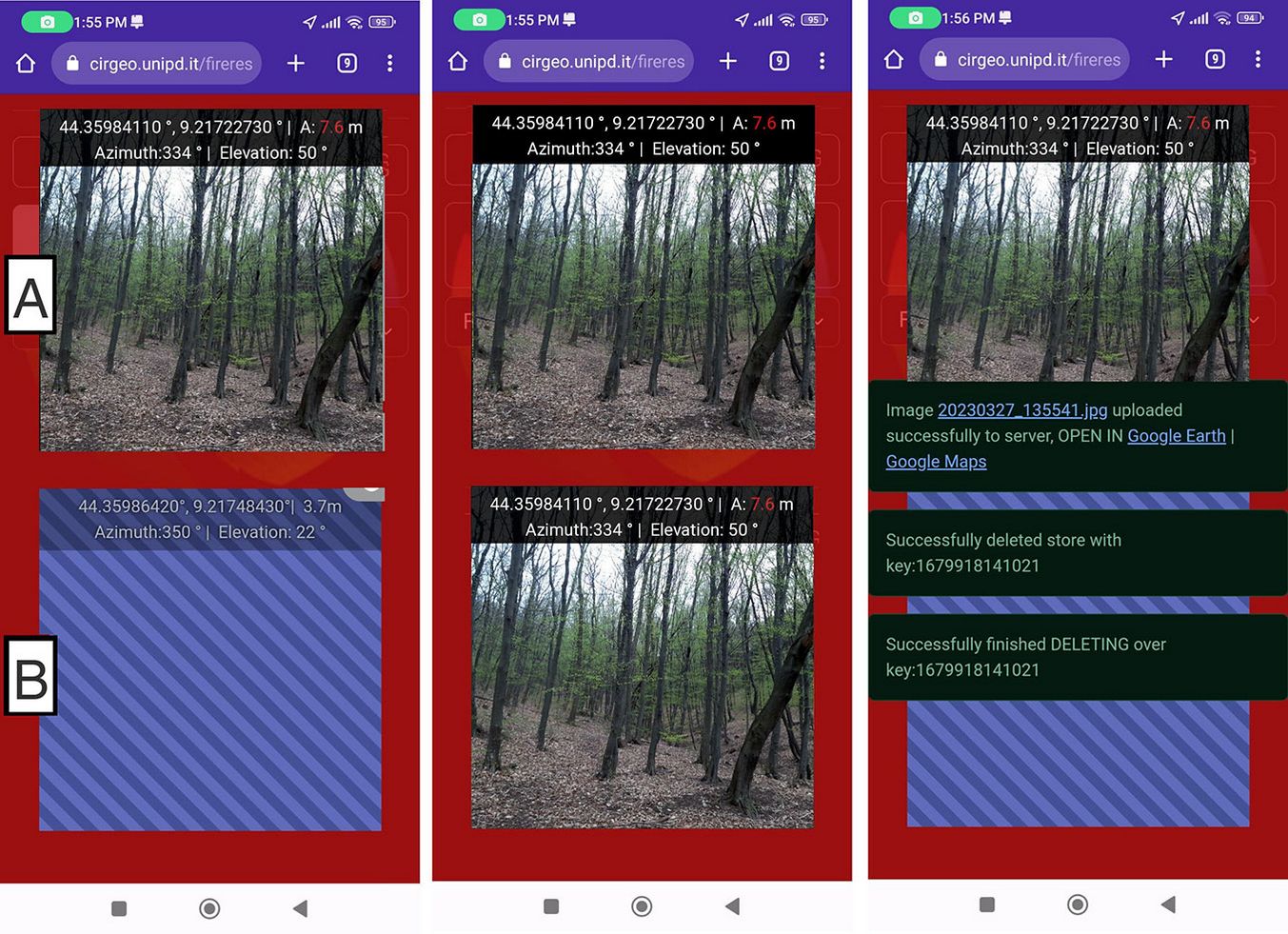

When the user taps the photo icon, the image capture interface is activated (Fig. 2). It consists of two square areas over a red background (Fig. 2 - left, areas A and B). One square (Fig. 2 - left, area A) shows the video stream from the camera, overlaid at its top with the coordinates, the accuracy, and the horizontal and vertical angles in degrees. The accuracy is obtained directly from the APIs and corresponds to a 95% confidence level. When users are satisfied with the image and have checked the geolocation accuracy, they can tap the video stream square, which takes a snapshot of the video frame and shows it as an image in the bottom square (Fig. 2 - left, area B), along with its coordinates, accuracy, and orientation values (Fig. 2 - central panel). After the user has taken a photo, the Geo-Catch App double-checks that it is accurately geotagged and informs the user if the camera is not enabled to read and save the location in images. At this point the image is not yet stored; this step lets the user double-check and evaluate the captured image (photo quality and accuracy). Upon approval, the user taps the captured image (Fig. 2 - left, area B) to finalize it and save it on the smartphone.

When the device is online, both the photo and its geolocation information are uploaded to the server of the Interdepartmental Research Center of Geomatics (CIRGEO), University of Padua, Italy. If an internet connection is not available, the data package (image with accompanying information) is cached and synchronized the next time the FIRE-RES Geo-Catch App detects an internet connection. Users do not lose cached images if the App is shut down, since images captured offline are stored until they are synchronized with the server. The locally stored copy is deleted only once the server has successfully received the uploaded image.

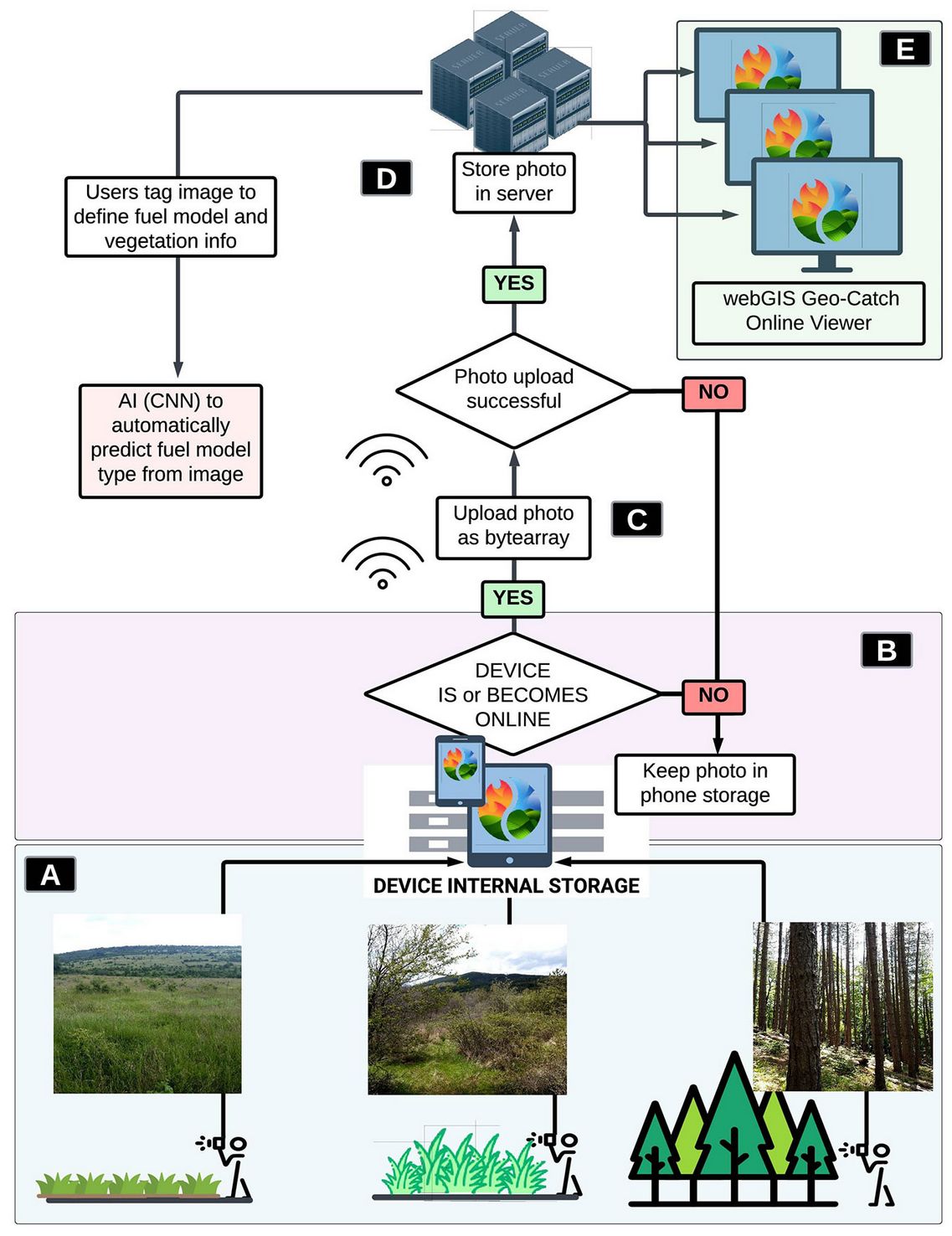

Technical details on the workflow are shown in Fig. 3 and described below, with user actions in quotation marks:

Fig. 3 - Framework of the workflow from the field to collected and stored geotagged and oriented images.

1. “The user in the field opens the App” from the device browser or directly from the installed App icon; this opens the video stream interface (Fig. 3A).

2. “The user taps the video stream box” (Fig. 2 - left, square A). This action triggers the following: (2.1) the image is temporarily saved in the device memory in JPEG format with 90% compression and shown in the bottom square (Fig. 2 - middle, square B); (2.2) the following additional information is stored as an ASCII string in the EXIF tag “ImageDescription” directly in the JPEG image memory blob: (a) project name; (b) geolocation: longitude and latitude; (c) orientation: horizontal and vertical Euler angles; (d) timestamp in POSIX (Unix) time, in milliseconds since 1 January 1970; (e) horizontal spatial accuracy, in meters; (f) user ID. An example of the information string is `FIRELinks|9.2174032|44.3598822|358|86|1679734943295|3.2|q7p1w0a1nk`.

3. “The user taps the captured image” (Fig. 2 - middle, square B). The tagged image is stored internally on the smartphone using the IndexedDB JavaScript API. The unique database key is the timestamp (see point 2.2d above), the value is the image blob, and the accompanying information is the ASCII string presented above.

4. One of two automatic actions follows (Fig. 3B): (a) the device is online, which triggers the upload of the image to the server; if the upload is successful, the record is deleted from the device’s IndexedDB; (b) the device is offline: nothing happens, and the image is kept in the device’s internal database via the IndexedDB JavaScript API.

In case (4b), action (4a) is triggered as soon as the device comes back online. We refer to this as a “lazy” data synchronization strategy: the upload happens without the user having to wait for it or check that it succeeded. As long as no upload occurs because the smartphone is offline, the data remain safely stored on the user’s device.
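The caching and lazy synchronization workflow above can be sketched as below. The sketch is written in Python for readability and only approximates the App's JavaScript logic: a dictionary keyed by the millisecond timestamp stands in for the IndexedDB object store, and `upload` and `is_online` are hypothetical callables standing in for the HTTP request to the server and the connectivity check.

```python
from typing import Callable, Dict, Tuple

# (JPEG bytes, ImageDescription string), keyed by the capture timestamp in ms.
Record = Tuple[bytes, str]


class LocalStore:
    """In-memory stand-in for the IndexedDB object store (hypothetical)."""

    def __init__(self) -> None:
        self._records: Dict[int, Record] = {}

    def put(self, timestamp_ms: int, jpeg: bytes, description: str) -> None:
        self._records[timestamp_ms] = (jpeg, description)

    def delete(self, timestamp_ms: int) -> None:
        self._records.pop(timestamp_ms, None)

    def pending(self) -> Dict[int, Record]:
        return dict(self._records)  # copy, so deleting while iterating is safe


def synchronize(store: LocalStore,
                is_online: Callable[[], bool],
                upload: Callable[[int, bytes, str], bool]) -> int:
    """Upload every cached record; delete each one only after a confirmed upload.

    Returns the number of records uploaded. Records whose upload fails stay in
    the store and are retried on the next call.
    """
    if not is_online():
        return 0  # offline (case 4b): keep everything, do nothing
    uploaded = 0
    for ts, (jpeg, description) in sorted(store.pending().items()):
        try:
            ok = upload(ts, jpeg, description)
        except OSError:
            ok = False  # network error: keep the local copy
        if ok:
            store.delete(ts)  # server confirmed receipt (case 4a)
            uploaded += 1
    return uploaded
```

In a browser, a routine like this could be attached to the `online` event of `window` so that pending records are retried as soon as connectivity returns. Using the timestamp as key assumes that no two captures share the same millisecond.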

Framework

Thanks to its Progressive Web App (PWA) framework, the proposed App can also work completely offline. The PWA framework enables web-Apps to work as smartphone Apps without having to be installed through the standard Android or iOS app stores (i.e., Google Play™, Apple App Store™). The App can thus be used either as a simple web-App, accessible via a web browser, or as a standalone application.

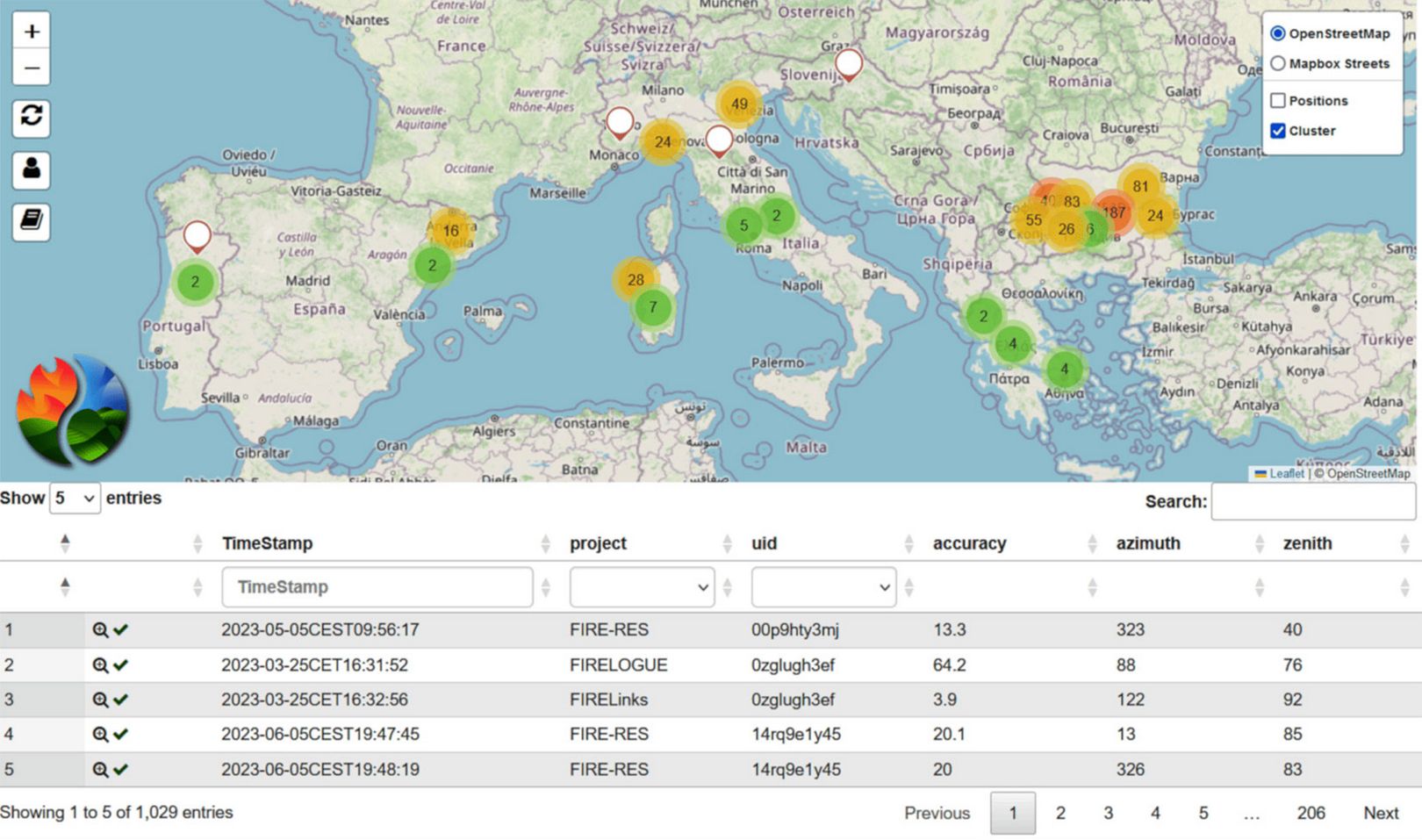

The App’s activities and interface (Fig. 3B) are built on HTML and JavaScript as the client-side environment and PHP as the server-side environment. The server side receives the raw image data as a byte-array blob and decodes it to store the image in a file-based structure on the server. A dedicated web-GIS portal, the FIRE-RES Geo-Catch Viewer, processes all information stored on the server (Fig. 4): it decodes the EXIF data of all images, associates each image with its user, and allows users to view each photo with its associated data. An advantage of this approach is that every image carries all of its information, making it fully transferable to other software that may process the image using the information in the EXIF tag “ImageDescription”.
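Because all metadata travel inside the image, a downstream tool only needs to split the “ImageDescription” string. The Python sketch below encodes and decodes that string and turns a record into a GeoJSON point for display in a web-GIS. The field order follows the example string given in the workflow above (project, longitude, latitude, horizontal angle, vertical angle, timestamp, accuracy, UID); the class and function names and the `image_url` property are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class CaptureInfo:
    project: str
    lon: float
    lat: float
    heading_deg: float   # horizontal angle
    tilt_deg: float      # vertical angle
    timestamp_ms: int    # also the local database key
    accuracy_m: float    # horizontal accuracy, 95% confidence
    uid: str


def _fmt(value) -> str:
    # Write whole-number floats without ".0" so that "358" round-trips as "358".
    if isinstance(value, float) and value.is_integer():
        return str(int(value))
    return str(value)


def encode(info: CaptureInfo) -> str:
    fields = [_fmt(v) for v in asdict(info).values()]
    if any("|" in f for f in fields):
        raise ValueError("fields must not contain the '|' separator")
    text = "|".join(fields)
    text.encode("ascii")  # raises UnicodeEncodeError: the EXIF tag is ASCII
    return text


def decode(text: str) -> CaptureInfo:
    parts = text.split("|")
    if len(parts) != 8:
        raise ValueError(f"expected 8 fields, got {len(parts)}")
    project, lon, lat, heading, tilt, ts, acc, uid = parts
    return CaptureInfo(project, float(lon), float(lat), float(heading),
                       float(tilt), int(ts), float(acc), uid)


def to_geojson_feature(info: CaptureInfo, image_url: str) -> dict:
    props = asdict(info)
    lon, lat = props.pop("lon"), props.pop("lat")
    props["image_url"] = image_url
    # GeoJSON positions are [longitude, latitude], the same order as the string.
    return {"type": "Feature",
            "geometry": {"type": "Point", "coordinates": [lon, lat]},
            "properties": props}


def feature_collection(features: List[dict]) -> str:
    return json.dumps({"type": "FeatureCollection", "features": features})
```

To read the tag from a saved JPEG, a library such as Pillow could be used, e.g. `Image.open(path).getexif().get(270)`, where 270 (0x010E) is the standard EXIF/TIFF tag number of ImageDescription.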

Fig. 4 - The Geo-Catch Viewer, the online web-GIS portal for accessing the stored geotagged and oriented images, showing a current overview of the data collected through the living labs of the FIRE-RES project.

From photos to fuel types

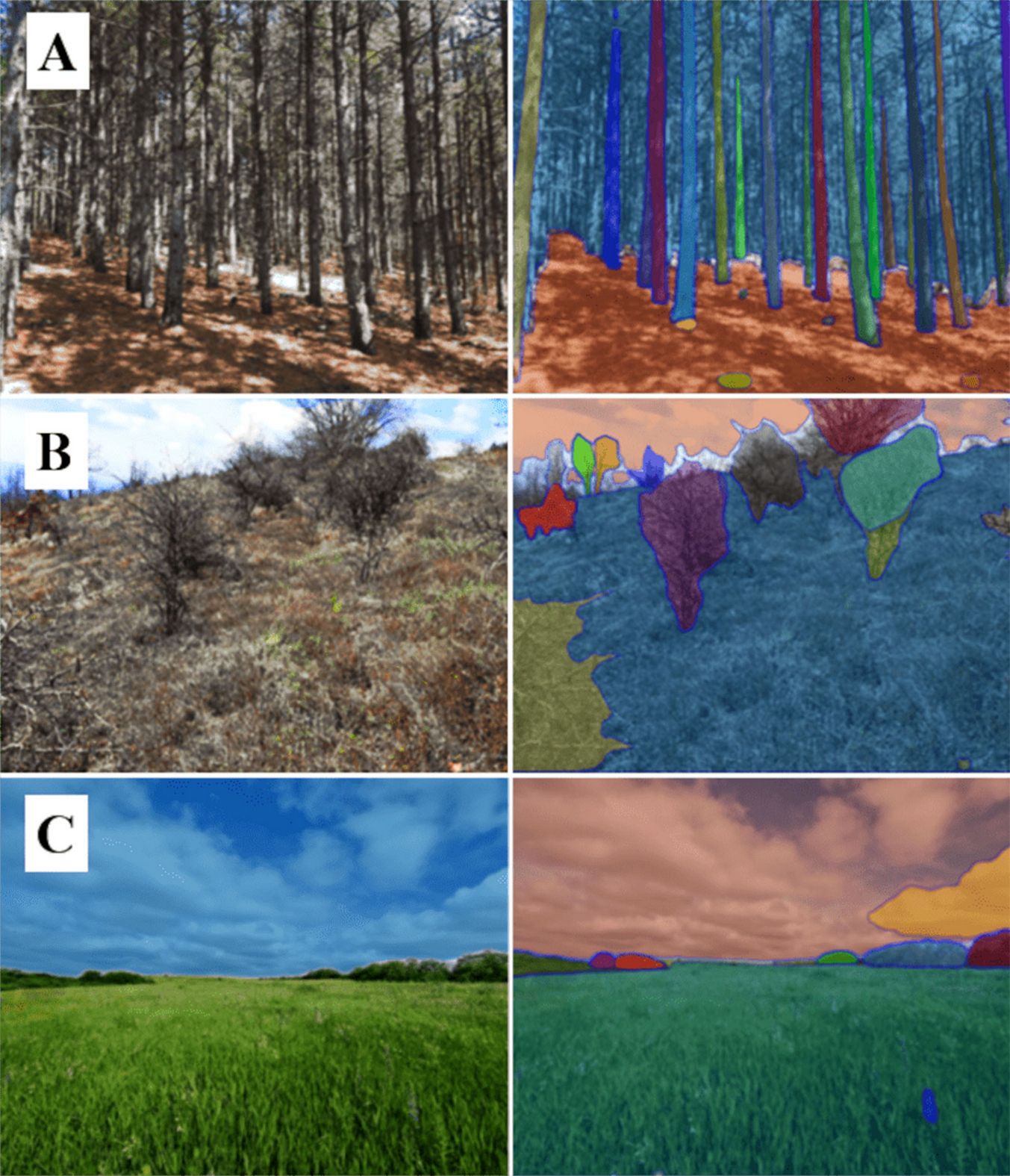

Our follow-up plan is for experts to assign a fuel model type to each acquired photo; these labels will subsequently be used to train, test, and validate an AI algorithm. The resulting model will be made available to a wide range of forestry and wildfire end-users in the research community, enabling them to predict the fuel model automatically from the collected images. Labels will be assigned by experts who can interpret the images and determine the correct fuel model type. To achieve an objective evaluation, each image will be assessed by two or more experts, thus cross-validating each interpretation. If all independent experts assign the same fuel model type, that type is selected directly; otherwise, a consensus is reached through interaction among experts. These actions can be quickly carried out via the FIRE-RES Geo-Catch Viewer, which provides the tools experts need to view and label the images directly online and then interact to reach a consensus when necessary. Labelled images can be used to train and validate an AI model that separates vegetation features from the scene’s background and further classifies them into fuel model types. Fig. 5 shows a first application of an automatic segmentation process using the method proposed by Kirillov et al. ([11]) on different main fuel types.
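The agreement rule described above can be expressed as a small triage step: images on which all experts agree receive the label directly, while the rest are queued for discussion. This is a minimal Python sketch; the function names, image identifiers, and the fuel model codes used in the example (Scott & Burgan-style codes) are illustrative assumptions.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def agreed_fuel_type(labels: Sequence[str], min_experts: int = 2) -> Optional[str]:
    """Return the fuel model type if all experts agree, otherwise None."""
    if len(labels) < min_experts:
        raise ValueError(f"need at least {min_experts} independent interpretations")
    return labels[0] if len(set(labels)) == 1 else None


def triage(assignments: Dict[str, List[str]]) -> Tuple[Dict[str, str], List[str]]:
    """Split images into accepted labels and images that need expert consensus."""
    accepted: Dict[str, str] = {}
    to_discuss: List[str] = []
    for image_id, labels in assignments.items():
        label = agreed_fuel_type(labels)
        if label is None:
            to_discuss.append(image_id)   # disagreement: resolve by interaction
        else:
            accepted[image_id] = label    # unanimous: accept directly
    return accepted, to_discuss


accepted, pending = triage({"img1": ["GR2", "GR2"], "img2": ["SH5", "TL3", "SH5"]})
```

Unanimity rather than a majority vote is used here because the text specifies that any disagreement is resolved through interaction among experts; in the example, `img2` would be sent to discussion even though two of three experts agree.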

Fig. 5 - Examples of smartphone images segmented with the method of Kirillov et al. ([11]) for the main fuel types (A - Forest, B - Shrubland, and C - Grassland).

Location and orientation accuracy

The recommended image accuracy in terms of geo-position and orientation depends on the target scale of each project. In the FIRE-RES project, we aim to validate a map with a cell spatial resolution of 100 × 100 m, for which the tolerated horizontal geolocation accuracy is 10 m (90th percentile Circular Error, CE90), i.e., at least 90 percent of horizontal errors must fall within the stated CE90 value. Smartphone geolocation errors, even under forest canopies, are well below this tolerance threshold, so only gross errors need to be corrected, which implies filtering out outliers. The accuracy achievable with smartphones has already been tested and validated in previous research under urban forest conditions ([15]), which found CE95 < 5 m.
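The CE90 check can be computed from field tests in which smartphone positions are compared with reference positions. The sketch below is a minimal Python illustration under stated assumptions: coordinates are already in a projected system in metres, the 50 m gross-error cut-off is a hypothetical value (the text does not specify one), and the CE95-to-CE90 conversion assumes circular, normally distributed errors, for which the radius containing a fraction p of errors is r_p = σ·sqrt(-2 ln(1 - p)).

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # projected (x, y) coordinates in metres


def horizontal_errors(measured: Sequence[Point], reference: Sequence[Point]) -> List[float]:
    """Planar distance between each smartphone fix and its reference position."""
    return [math.hypot(mx - rx, my - ry)
            for (mx, my), (rx, ry) in zip(measured, reference)]


def percentile(values: Sequence[float], q: float) -> float:
    """Percentile with linear interpolation between order statistics (q in 0..100)."""
    s = sorted(values)
    if not s:
        raise ValueError("no values")
    k = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(k), math.ceil(k)
    return s[lo] + (s[hi] - s[lo]) * (k - lo)


def ce90(errors: Sequence[float], gross_error_m: Optional[float] = 50.0) -> float:
    """Empirical CE90 after discarding gross errors (outliers) above a cut-off."""
    kept = [e for e in errors if gross_error_m is None or e <= gross_error_m]
    return percentile(kept, 90.0)


def rescale_circular_error(radius: float, p_from: float, p_to: float) -> float:
    """Convert, e.g., CE95 to CE90 assuming circular Gaussian (Rayleigh) errors."""
    return (radius * math.sqrt(-2.0 * math.log(1.0 - p_to))
            / math.sqrt(-2.0 * math.log(1.0 - p_from)))
```

Under the Gaussian assumption, the CE95 < 5 m reported in [15] corresponds to a CE90 of roughly 4.4 m, inside the 10 m tolerance. Real errors may deviate from this idealized model, so the empirical percentile after outlier filtering remains the primary check.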

Regarding orientation accuracy, quick tests were carried out by pointing the smartphone perpendicular to regular building facades and comparing the measured horizontal angles with the directions derived from existing cartography. The readings fell within ± 20°, but some of them showed an offset of 180°. This is probably due to the smartphone’s internal interpretation of its orientation relative to the axis of its internal compass. Additional studies are planned to investigate this matter further.
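Comparing compass readings with reference directions requires angular differences that wrap around 360°; otherwise a reading of 358° against a reference of 5° would appear to be 353° off. The Python sketch below, with hypothetical function names, computes the wrapped difference and flags readings that match the reference only after adding 180°, the offset observed in the tests; the ±20° tolerance is taken from the test results above.

```python
def angle_diff(measured_deg: float, reference_deg: float) -> float:
    """Signed difference measured - reference, wrapped to the interval [-180, 180)."""
    return (measured_deg - reference_deg + 180.0) % 360.0 - 180.0


def classify_reading(measured_deg: float, reference_deg: float,
                     tol_deg: float = 20.0) -> str:
    """Label a compass reading as 'ok', 'flipped_180', or 'off' against a reference bearing."""
    if abs(angle_diff(measured_deg, reference_deg)) <= tol_deg:
        return "ok"
    if abs(angle_diff(measured_deg + 180.0, reference_deg)) <= tol_deg:
        return "flipped_180"  # consistent with the 180° offset noted in the tests
    return "off"
```

Counting `flipped_180` cases separately from `off` cases could help quantify how often the offset occurs in the planned follow-up studies.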

Conclusions

A reliable fuel map is a useful dataset for assessing fire effects and mitigating the negative consequences of wildfires through early warning systems, fire risk estimation, and real-time monitoring of forest susceptibility to wildfires. Fuel map classifications are well structured hierarchically and well tested for fire simulations. The FIRE-RES Geo-Catch mobile application can contribute to more robust and accurate fuel maps at a pan-European scale, improving the accuracy of fuel type classification through the collected field inventory data. People involved in acquiring geolocated imagery to support fuel mapping through ground control points will also contribute specialized knowledge during the validation phases. The geolocated images need to be well distributed across Europe, and our target is to obtain them collaboratively, involving forest services, civil protection agencies, research centres, academia, and private companies. Involving the general public could also be an option, taking advantage of more regular data acquisitions. In addition, the databank created through this in-situ crowdsourcing of images can be used for other purposes, and its applicability will increase further if images of the same areas are taken recurrently over different time periods. Finally, this tool can be used to assess forest status by identifying the main natural and anthropogenic alterations, capturing changes not only in fuel types but also in the state and condition of a forest, whether phenological or due to natural or human impacts, and thus identifying the main drivers of forest change, which are not necessarily related to fire events.

Acknowledgements

This mobile application was funded by the European Union’s Horizon 2020 Research and Innovation Programme, by the project entitled “Innovative technologies & socio-ecological-economic solutions for fire resilient territories in Europe - FIRE-RES”, grant agreement no. 101037419. We would like to thank each of our colleagues who anonymously participated during the testing phase of the App, which has been very helpful in improving and making the App stable and operational.

References


Authors’ Info

Authors’ Affiliation

Francesco Pirotti 0000-0002-4796-6406

Department of Land, Environment, Agriculture and Forestry - TESAF, University of Padova, v. dell’Università 16, 35020 Legnaro, PD (Italy)

Antoni Trasobares 0000-0002-8123-9405

Forest Science and Technology Centre of Catalonia - CTFC, Carretera de Sant Llorenç de Morunys, Km 2, 25280 Solsona (Spain)

Sergio de-Miguel 0000-0002-9738-0657

Joint Research Unit CTFC - AGROTECNIO - CERCA, Carretera de Sant Llorenç de Morunys, Km 2, 25280 Solsona (Spain)

Department of Agricultural and Forest Sciences and Engineering, University of Lleida, 25198 Lleida (Spain)

CoLAB ForestWISE - Collaborative Laboratory for Integrated Forest & Fire Management, Quinta de Prados, 5001-801 Vila Real (Portugal)

Forest Research Centre, School of Agriculture, University of Lisbon, Tapada de Ajuda, 1349-017 Lisbon (Portugal)

Space Research and Technology Institute, Bulgarian Academy of Sciences, str. “Acad. Georgy Bonchev” bl. 1, 1113 Sofia (Bulgaria)

Department of Forestry and Natural Environment Management, Agricultural University of Athens, 36100 Karpenisi (Greece)

Consiglio Nazionale delle Ricerche - CNR, Istituto per la BioEconomia, v. Madonna del Piano 10, 50019 Sesto Fiorentino, FI (Italy)

Interdepartmental Research Center of Geomatics - CIRGEO, University of Padova, v. dell’Università 16, 35020 Legnaro, PD (Italy)

Corresponding author

Paper Info

Citation

Kutchartt E, González-Olabarria JR, Trasobares A, de-Miguel S, Cardil A, Botequim B, Vassilev V, Palaiologou P, Rogai M, Pirotti F (2023). FIRE-RES Geo-Catch: a mobile application to support reliable fuel mapping at a pan-European scale. iForest 16: 268-273. - doi: 10.3832/ifor4376-016

Academic Editor

Marco Borghetti

Paper history

Received: May 09, 2023

Accepted: Jul 10, 2023

First online: Oct 19, 2023

Publication Date: Oct 31, 2023

Publication Time: 3.37 months

Copyright Information

© SISEF - The Italian Society of Silviculture and Forest Ecology 2023

Open Access

This article is distributed under the terms of the Creative Commons Attribution-Non Commercial 4.0 International (https://creativecommons.org/licenses/by-nc/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

