Assessing the scientific productivity of Italian forest researchers using the Web of Science, SCOPUS and SCIMAGO databases

iForest - Biogeosciences and Forestry, Volume 5, Issue 3, Pages 101-107 (2012)

doi: https://doi.org/10.3832/ifor0613-005

Published: May 30, 2012 - Copyright © 2012 SISEF

Short Communications

Abstract

For a long time, a quantitative assessment of the productivity of Italian researchers was lacking; the first and only assessment was the Three-Year Research Evaluation for the period 2001-2003. Italian Law 240/2010, which governs the organization of research and universities, requires a system for evaluating the scientific productivity of Italian researchers. In 2011, both the National Agency for the Evaluation of Universities and Research Institutes (ANVUR) and the National University Council (CUN) proposed a set of evaluation criteria based on a bibliometric approach, with indexes calculated from the Thomson Reuters Web of Science (WOS) or the Elsevier SciVerse SCOPUS databases. The aim of this study is twofold: (i) to present the results of an assessment of the global aggregated scientific productivity of the Italian forestry community for 1996-2010 using the SCOPUS data available from the on-line SCIMAGO system; and (ii) to compare the WOS and SCOPUS databases with respect to three indexes of scientific productivity (number of publications, number of citations, h-index) for university forest researchers in Italy. Two subcategories of forestry were considered: AGR05 - forest management and silviculture, and AGR06 - wood technology. Out of a total of 84 authors, 76 were included in the analysis because they were not affected by unresolved homonymy or duplication. Overall, the trend in scientific productivity for Italian forestry is promising. Italy ranked 10th in terms of the h-index, with its importance increasing relative to other European countries, although the scientific contribution of individual authors was highly heterogeneous. Both WOS and SCOPUS databases were suitable sources of information for evaluating the scientific productivity of Italian authors. 
Although the two databases did not produce statistically significant differences for any of the three indexes, the advantages and disadvantages of each source must be carefully considered if they are used operationally to evaluate Italian scientific productivity.

Keywords

Scientific Evaluation, SCOPUS, Web of Science, Google Scholar, SCIMAGO

Introduction

The quantitative evaluation of researchers’ activity is based on the principle that scientific productivity is related to the degree to which the results of investigations are published. Research performance is a multidimensional concept influenced by the ability of researchers to accomplish multiple tasks, including publishing, teaching, fund-raising, public relations, participation in meetings and conferences, and administrative duties ([31]). In this context, publishing capacity must be seen not only as a task for researchers but also as an indicator of research performance.

Evaluation of researcher productivity follows two main approaches: (i) peer-review, which entails researchers submitting their products to panels of appointed experts who conduct evaluations; and (ii) bibliometric, which entails calculation of indexes based on numbers of publications and citations. The latter indexes may also be used to inform peer-review evaluations. The literature debating the pros and cons of both approaches is vast. Abramo & D’Angelo ([2]) provide a recent overview contrasting the peer-review and the bibliometric systems for national research assessments.

In Italy, research evaluation has been “strongly neglected” in the past ([1]). The first and only assessment in Italy was the Three-Year Research Evaluation ([38]), which appraised productivity for 2001-2003 using a peer-review approach. The recent Law 240/2010 from the Italian Ministry for Research and University (MIUR, Ministero dell’Istruzione, Università e Ricerca) requires the development of a system for assessing the scientific productivity of researchers in Italian universities and other public research organizations. Both the National Agency for the Evaluation of Universities and Research Institutes (ANVUR, Agenzia Nazionale per la Valutazione del Sistema Universitario e della Ricerca) and the National University Council (CUN, Consiglio Nazionale Universitario) proposed bibliometric methods and a set of criteria for this purpose. This new evaluation system will serve as the basis for assessing the qualifications of candidates for new research positions in universities and other research institutions and for annual budget allocations from MIUR to universities and research institutes.

The CUN ([11]) announced multiple recommendations specifically addressing different thematic areas of the Italian research system. For the agriculture and veterinary area, one of the main proposed criteria was the number of scientific publications in journals included in the Thomson Reuters Web of Science (WOS) and/or in the Elsevier SciVerse SCOPUS. The ANVUR ([5]) and CUN ([11]) proposed adopting several criteria based on the number of publications, the number of citations, and the h-index calculated using the SCOPUS or WOS databases. Both CUN ([11]) and ANVUR ([5]) focus more on the criteria to be used in the evaluations than on the databases, which they treat as equivalent.

Citation databases

Citation databases, also called bibliographic databases, are used to combine information related to bibliographic productivity and to facilitate the identification of authors of publications and sources of publication citations. A large number of thematic citation databases are available, but their coverage is limited to specific academic or scientific areas. Other databases are more general and have been constructed to cover overall academic productivity. Web of Science, SCOPUS and Google Scholar are the best-known databases, and all three are used in the forestry sector.

Garfield ([14]) constructed the first citation database for combining information on publications and associated citations for a defined set of scientific journals. Nowadays, Thomson Reuters, which continues the work initiated by Garfield ([15]), has developed a citation database known as the “Web of Science” (WOS). WOS actually consists of seven different databases, of which five are for citations from journals (⇒ http://www.thomsonreuters.com/). The Science Citation Index Expanded covers 6550 peer-reviewed scientific journals; the Social Sciences Citation Index covers 1950 social science journals; the Arts & Humanities Citation Index covers 1160 journals in the literature, arts and humanities domain; and the Conference Proceedings Citation Index and the Social Sciences & Humanities Conference Proceedings Index cover conference proceedings.

For a long time, WOS was the only citation database available. In 2005, Elsevier released SCOPUS, which is currently part of a hub of on-line services called SciVerse (⇒ http://www.hub.sciverse.com/). The SciVerse SCOPUS system covers approximately 18 500 titles, of which 17 500 are peer-reviewed journals.

Recently, a scientific consortium led by the Consejo Superior de Investigaciones Científicas (CSIC) in Spain released an on-line enhancement of the SciVerse SCOPUS database through the SCIMAGO system ([17]). SCIMAGO is an on-line, publicly accessible, interactive system that calculates multiple aggregated statistics based on the SCOPUS database.

Google Scholar (⇒ http://scholar.google.com/) is a web search engine developed by Google Inc. providing free access to the world’s scholarly literature. Google Scholar has been available in beta version since November 2004 and searches records from commercial, non-profit, institutional and individual bibliographic databases.

The use of citation databases

Citation databases have multiple bibliometric uses. Historically, the most common applications have been calculation of the scientific relevance of scholarly journals and evaluation of researcher productivity. In recent decades a very large number of indicators have been proposed for both applications ([23]).

Researchers have argued both for and against the use of bibliometrics for assessing research quality and researcher productivity ([28]). Proponents have reported the validity and reliability of citation indexes in research assessments, as well as the positive correlation between publications/citation counts and the results of peer review evaluations ([6], [16], [20], [27], [32], [35], [37]). On the other hand, critics claim that the validity of citation indexes is limited by the assumption that a scientist’s relevance is expressed solely by the number of papers published or the number of citations received ([26], [34]).

Since SCOPUS and Google Scholar began competing with Thomson Reuters, a large number of comparisons of bibliometric indexes calculated on the basis of the three citation databases have been published. Although a comprehensive review is beyond the scope of this study, a few examples are noted. Bar-Ilan ([7]) compared the h-index for a set of highly-cited Israeli researchers, and the citations received by one specific book ([8]). Garcia-Perez ([13]) worked with the h-index in psychology; Li et al. ([24]) evaluated the potential of the bibliometric tool SciFinder for analyzing medical journal citations; Meho & Yang ([28]) worked with 25 library and information science faculty members; and Mikki ([29]) analysed 29 authors, mainly in the area of climate and petroleum geology at the University of Bergen. Jacsó ([21]) used different citation databases to test and compare indexes for citations for one specific author, citations from one or several specific papers and citations from one specific journal. Bauer & Bakkalbasi ([10]) compared citation counts for articles from the Journal of the American Society for Information Science and Technology published in 1985 and in 2000. Bakkalbasi et al. ([9]) compared citation counts for articles from two disciplines, oncology and condensed matter physics, for 1993 and 2003.

The results of these comparative studies reveal three key findings. First, although the three sources - WOS, SCOPUS and Google Scholar - have different goals and contents, they all track citations that are potentially useful for bibliometric studies ([29]). WOS is still considered the main bibliographic source of information, but data retrieved from SCOPUS and Google Scholar, in addition to WOS, produce more accurate and comprehensive assessments of the scholarly impact of authors ([28]). Second, the considerable overlap between WOS and SCOPUS varies depending on the period of interest and the scientific area. In terms of content, the overlap between Google Scholar and each of WOS and SCOPUS varies even more than the overlap between WOS and SCOPUS. Google Scholar stands out in its coverage of conference proceedings as well as international, non-English language journals, and its greater coverage includes some items that are not found in the other databases ([4]). On the other hand, Google Scholar’s lack of quality control limits its use as a bibliometric tool because of non-scholarly sources, erroneous citation data and errors of omission and commission when using search features ([22], [29]). The authors of the previously cited reviews and comparative studies tend to agree that the search results from Google Scholar are very “noisy” and therefore require difficult and time-consuming filtering to obtain usable information, especially for evaluation purposes. Third, Bar-Ilan ([7]) showed that the rankings of scientists based on the three different citation databases are highly correlated, with Spearman coefficients ([36]) between 0.780 and 0.884. However, the use of different citation databases may significantly alter the relative ranking of scientists with mid-range rankings ([6], [28]).

Aim of this study

The main aim of this study is to carry out a quantitative assessment of the effects of the WOS and SCOPUS citation databases on the calculation of bibliometric indexes. The assessment focuses on evaluating differences and similarities and discussing the pros and cons of the two systems. The aim is not to conduct a formal, official scientific appraisal. The specific objectives of this report are twofold: (i) to present the results of an assessment of the global aggregated scientific productivity of the Italian forestry community for 1996-2010 using the SCOPUS data available from the SCIMAGO system; and (ii) to compare the WOS and SCOPUS databases with respect to three indexes of scientific productivity for university forestry researchers in Italy. The three indexes - number of publications, number of citations, and h-index - were calculated for all researchers (full, associate and assistant professors, corresponding to the Italian ordinari, associati and ricercatori, respectively); assistant professors with non-permanent positions were also considered. Two subcategories of forestry were considered from all the universities in Italy: AGR05, forest management and silviculture, and AGR06, wood technology.

Materials and methods

Based on the results of the bibliographic review and in consideration of the guidelines proposed by both ANVUR ([5]) and CUN ([11]), this study was restricted to a comparison of the WOS and SCOPUS databases.

The first two bibliographic indexes considered are straightforward. First, number of publications is simply the number of scientific papers published by a given author. Only journals covered by the WOS and SCOPUS citation databases were considered. Neither the author’s position in the author list nor the relevance of the journals was taken into consideration. Second, number of citations is the number of times papers written by an author are cited by other papers. As before, the relevance of the journal from which the citation comes is not considered. Self-citations, defined as citations from papers authored or co-authored by the author in question, may be either included or excluded.
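To make the two counting rules concrete, the Python sketch below counts an author’s publications and citations from a toy set of records, with and without self-citations as defined above. The `Paper` record layout and the function names are hypothetical and do not reflect how WOS or SCOPUS store or expose their data; only the author-in-question definition of self-citation is implemented (some studies also exclude citations coming from co-authors).

```python
from dataclasses import dataclass, field


@dataclass
class Paper:
    """Hypothetical record: one paper and the author lists of the papers citing it."""
    authors: set[str]
    citing_author_lists: list[set[str]] = field(default_factory=list)


def count_publications(papers: list[Paper], author: str) -> int:
    # Every paper listing the author counts once, whatever the author position.
    return sum(1 for p in papers if author in p.authors)


def count_citations(papers: list[Paper], author: str, exclude_self: bool = False) -> int:
    total = 0
    for p in papers:
        if author not in p.authors:
            continue
        for citing_authors in p.citing_author_lists:
            # Self-citation: the citing paper is (co-)authored by the author in question.
            if exclude_self and author in citing_authors:
                continue
            total += 1
    return total
```

Journal relevance is ignored in both counts, mirroring the rules above.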

The third index is the Hirsch ([19]) or h-index. This index was specifically designed to evaluate the performance of researchers. The h-index is the largest number N such that N of a given author’s articles have each been cited at least N times; it is therefore an integer value. The meaning of the h-index can be easily understood by constructing a graph in which the X-axis ranks all papers by the author from most cited to least cited and the Y-axis gives the number of citations for each paper. The point at which the 1:1 line intersects the line connecting the dots representing citations corresponds to the h-index value. The h-index can also be calculated in aggregate for teams of researchers, research centers, working groups, universities, thematic areas, regions or countries. In addition, the h-index can be calculated with or without self-citations.
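The definition can be sketched in a few lines of Python. This is an illustrative implementation of Hirsch’s definition, not the code used by WOS or SCOPUS; the helper `group_h_index` and its input layout (a hypothetical mapping from author to paper identifiers and citation counts) are assumptions made for the example. Passing citation counts that exclude self-citations gives the corresponding h-index without self-citations.

```python
def h_index(citation_counts) -> int:
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)  # most cited first (the X-axis of the graph)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:  # this paper lies on or above the 1:1 line
            h = rank
        else:
            break
    return h


def group_h_index(papers_by_author: dict[str, dict[str, int]]) -> int:
    """Aggregate h-index for a group; a co-authored paper is counted only once."""
    pooled: dict[str, int] = {}
    for papers in papers_by_author.values():
        pooled.update(papers)  # keyed by a hypothetical paper identifier
    return h_index(pooled.values())
```

For example, citation counts of 10, 8, 5, 4 and 3 give h = 4: four papers have at least four citations each, but there are not five papers with at least five.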

The first part of the paper focuses on a global comparison of the aggregated scientific productivity in forestry using the SCIMAGO system ([33]), which is populated with data from the SCOPUS database for the years 1996 to 2010. For each available country and year, the total number of scientific papers published and the number of citations (excluding self-citations) were queried from SCIMAGO. Data for the European countries with the greatest scientific productivity (France, Germany, Italy, Spain, United Kingdom) are compared with data for China. China was included in the analysis because its increasing trend in scientific productivity is frequently used as a reference in scientometric analyses. The United States of America (USA) was not included in the analyses because its large number of scientific products tends to mask the trends for European countries ([25]). The relative importance of a country is calculated as the ratio between the number of publications (or citations) for that country and the total number of publications (or citations) for all the countries considered. Temporal trends were analyzed with general linear regressions, and the statistical significance of the correlation coefficients was determined by the t test.
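A minimal sketch of the two calculations described above, assuming per-country yearly totals have already been downloaded from SCIMAGO: the relative importance of each country as a share of the total for the countries considered, and the t statistic used to test the correlation between year and a series, t = r√(n−2)/√(1−r²) with n−2 degrees of freedom (equivalent to the t test on the slope of a simple linear regression). The function names are hypothetical. Converting t to a p-value requires a t table or a library such as `scipy.stats.t.sf`, which the sketch deliberately leaves out.

```python
import math


def relative_share(values_by_country: dict[str, float]) -> dict[str, float]:
    """Each country's publications (or citations) divided by the total for all countries considered."""
    total = sum(values_by_country.values())
    return {country: v / total for country, v in values_by_country.items()}


def trend_t(years: list[float], y: list[float]) -> tuple[float, float]:
    """Pearson r between year and y, and t = r * sqrt((n - 2) / (1 - r^2))."""
    n = len(years)
    if n < 3:
        raise ValueError("need at least three years")
    mx, my = sum(years) / n, sum(y) / n
    sxx = sum((x - mx) ** 2 for x in years)
    syy = sum((v - my) ** 2 for v in y)
    sxy = sum((x - mx) * (v - my) for x, v in zip(years, y))
    r = sxy / math.sqrt(sxx * syy)
    if abs(r) >= 1.0:
        return r, math.copysign(math.inf, r)  # perfect linear trend
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return r, t
```

When the series is a rank position (as in Fig. 1), a negative slope corresponds to an improving rank, so the sign of t should be read accordingly.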

The second part of the paper focuses on evaluation of the two citation databases with respect to similarities and differences for the indexes of scientific productivity for individual Italian university researchers in forestry. The list of assistant, associate, and full professors was constructed using the on-line database from the MIUR for AGR05, silviculture and forest management, and AGR06, wood technology ([30]). Bibliographic data were acquired from the two citation databases: the Thomson Reuters Web of Science (version 4.10, ⇒ http://apps.isiknowledge.com/ - considered time span: 1996-2010) and SciVerse SCOPUS from Elsevier (⇒ http://www.scopus.com/ - considered time span: 1996-2010). The records accessed from both the databases are those available for a connection granted by the University of Molise.

Based on information for the selected authors from the two databases, the following indexes were calculated: total number of papers published, total number of citations and total number of citations excluding self-citations for both WOS and SCOPUS, and the h-index and the h-index excluding self-citations for SCOPUS only. Both the SCOPUS and WOS databases are affected by possible homonymy and duplications. In the case of homonymy, the two databases may incorrectly assign papers and citations when two authors have the same family name and the same first letter of the given name. For these cases, either augmented or diminished numbers of publications or citations may be assigned to an author. In the case of duplication, the same author may have multiple identities in the database as a result of publishing with different affiliations or in different fields. For these cases, a diminished number of publications or citations is usually assigned to the author ([18]).
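The two failure modes can be illustrated with a toy Python sketch. The matching rules below (family name plus first initial, optionally combined with affiliation) are hypothetical simplifications chosen for illustration, not the actual author-identification procedures of WOS or SCOPUS, and `person_id` is a ground-truth identity available only in this demo. Matching on name alone merges two different people (homonymy), while adding the affiliation to the key splits one person who changed institution into two identities (duplication).

```python
from collections import defaultdict, namedtuple

# Hypothetical record layout; person_id is the true identity, known only for this demo.
Record = namedtuple("Record", "person_id given family affiliation")


def name_key(rec):
    # Hypothetical matching rule: family name plus first initial of the given name.
    return (rec.family.strip().lower(), rec.given.strip()[0].lower())


def cluster(records, use_affiliation=False):
    """Group records into database 'authors' using the name key (and optionally affiliation)."""
    groups = defaultdict(list)
    for rec in records:
        key = name_key(rec)
        if use_affiliation:
            key += (rec.affiliation.strip().lower(),)
        groups[key].append(rec)
    return dict(groups)


def homonym_clusters(groups):
    """Clusters that merge two or more real people (augmented counts)."""
    return [key for key, recs in groups.items() if len({r.person_id for r in recs}) > 1]


def duplicated_people(groups):
    """Real people spread over two or more clusters (diminished counts per identity)."""
    seen = defaultdict(set)
    for key, recs in groups.items():
        for r in recs:
            seen[r.person_id].add(key)
    return {pid for pid, keys in seen.items() if len(keys) > 1}
```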

For the purposes of this paper, homonymies and duplications were tentatively resolved based on the scientific area or the affiliation of the authors. Authors for whom homonymies and duplications could not be resolved were excluded from the analysis. After resolving issues related to homonymies and duplications, means of number of publications, number of citations excluding self-citations, and h-index for the selected authors were calculated for the two citation databases. Differences in the means for each index were tested for statistical significance using Wilcoxon’s signed-rank test ([39]). In addition, using the methodology proposed by González-Pereira et al. ([17]), correlations between the values of the three indexes for the two databases were also calculated using Spearman’s correlation coefficient ([36]).
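The two tests can be sketched in plain Python, which may help readers reproduce comparisons of this kind. This is an illustrative sketch, not the software used in the study: the Wilcoxon test below uses the large-sample normal approximation with a tie correction and no continuity correction, and drops zero differences, so its Z and p may differ slightly from those reported by statistical packages (for example `scipy.stats.wilcoxon`, which may use exact distributions for small samples). Spearman’s coefficient is computed as the Pearson correlation of tie-averaged ranks.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the (tie-averaged) ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)


def wilcoxon_signed_rank(x, y):
    """Paired signed-rank test (normal approximation); returns (z, two-sided p)."""
    d = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(v) for v in d])
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    mean = n * (n + 1) / 4
    tie_sizes = Counter(abs(v) for v in d).values()
    var = n * (n + 1) * (2 * n + 1) / 24 - sum(t ** 3 - t for t in tie_sizes) / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

In this setting, `x` and `y` would hold the same authors’ index values from WOS and SCOPUS, in the same order.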

Results

Global data

At the global level, the cumulative scientific productivity for forestry for 1996-2010 is 0.55% of the total in terms of articles published and 0.63% in terms of citations. For Italy, the values are smaller, 0.33% and 0.36%, respectively. The three disciplines in Italy with the greatest scientific productivity are clinical medicine, biomedical research and chemistry. Universities produced more than two-thirds of the total national research product in terms of scientific papers. However, hospitals and health research organizations had the greatest number of citations ([1]).

In forestry, the USA has long been in first place with respect to both number of publications (2891 in 2010) and number of citations (536 excluding self-citations in 2010). In 2007, China ranked second with respect to number of publications (746 documents), displacing Canada (646 documents), which had historically held that position. With respect to citations, Canada, France, Germany and the United Kingdom have all been ranked second, depending on the year.

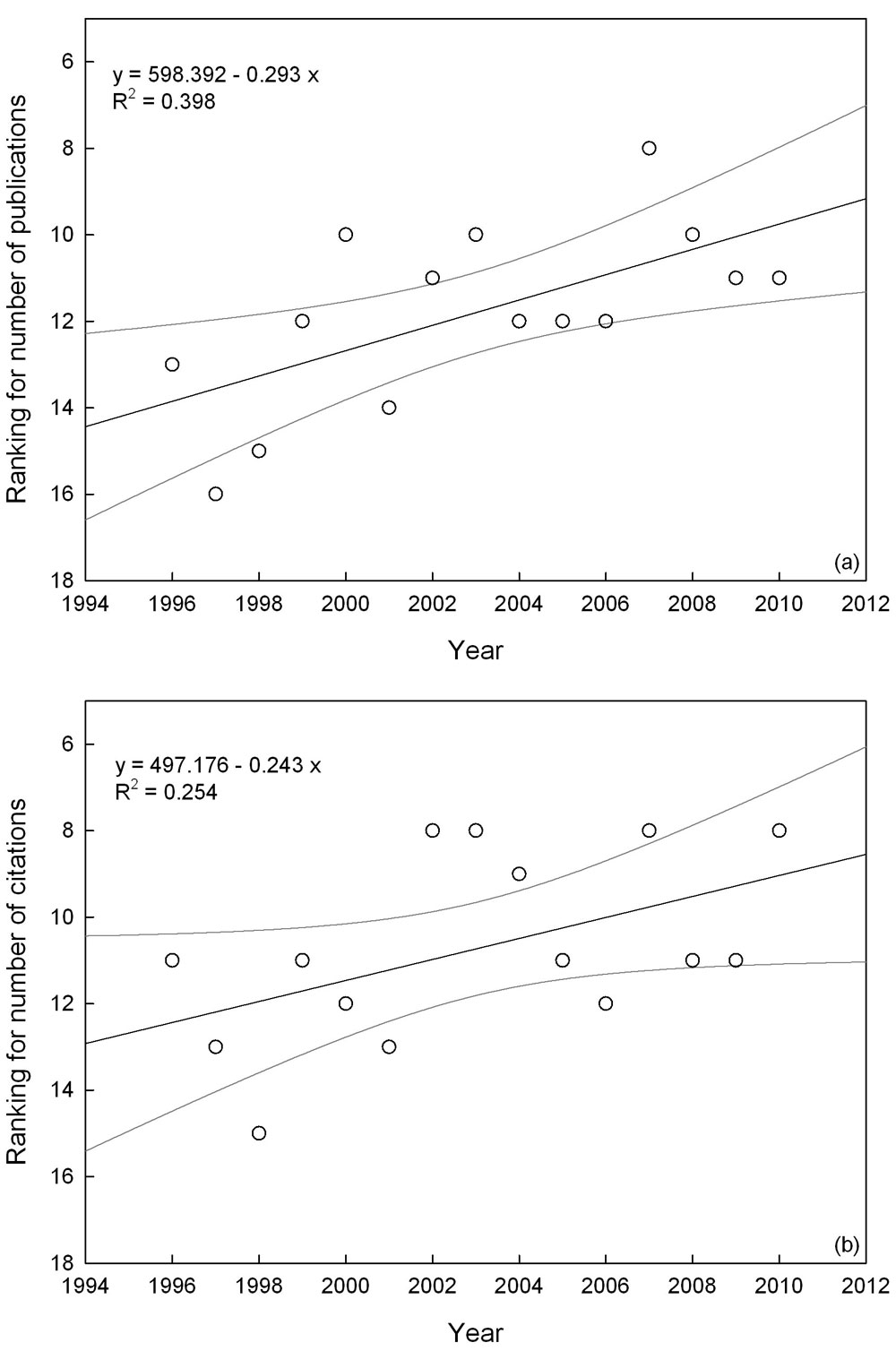

For all scientific areas at the global level for 1996-2010, Italy ranked 8th with respect to number of publications, 11th with respect to number of citations and 7th with respect to the h-index. These rankings remained constant over the years considered. For forestry over the same period, Italy ranked 12th with respect to number of publications (2396 documents), 11th with respect to number of citations (22 865 excluding self-citations) and 10th with respect to the h-index (1050). The annual rankings revealed an increasing trend with respect to both number of published papers (t = 2.929, p<0.05) and number of citations (t = 2.583, p<0.05 - Fig. 1).

Fig. 1 - Trend of rank position of Italy at global level in the scientific area “forestry” on the basis of the total number of publications produced (a) and citations obtained (b). Dotted lines are the 95% confidence intervals of linear trend regressions. Data source: SCIMAGO database.

On an annual basis, the number of scientific papers published by Italian forest researchers in this period increased from 62 in 1996 to 285 in 2010 (with a maximum of 305 in 2007).

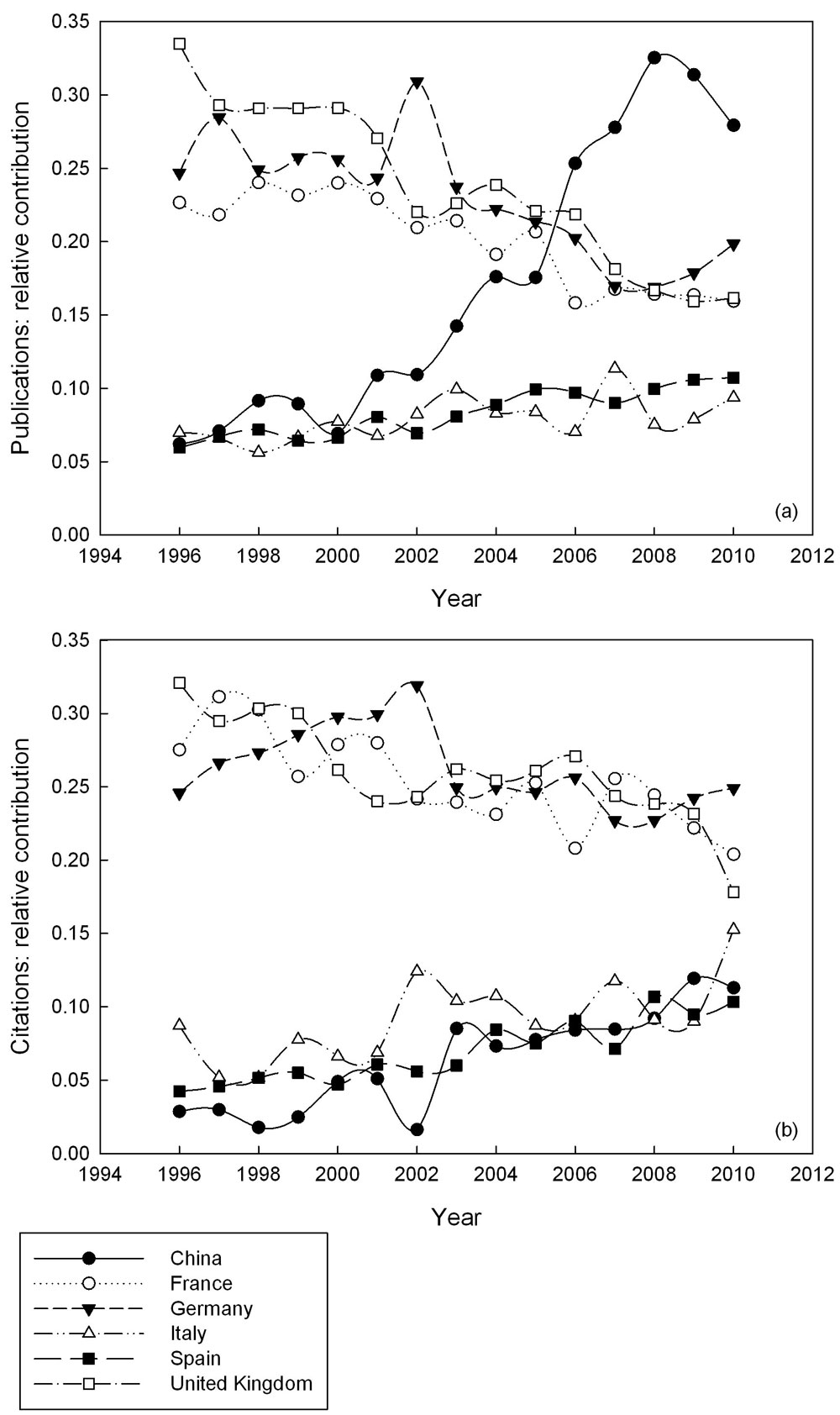

Relative trends for France, Germany and the United Kingdom (which traditionally publish the most forestry papers among European countries) were negative. For these three countries, the t values of the correlation coefficients of the linear trends were 7.256 (p<0.001), 4.655 (p<0.001) and 13.905 (p<0.001), respectively, for relative contribution to publications, and 5.023 (p<0.001), 2.182 (p<0.05) and 5.481 (p<0.001), respectively, for relative contribution to citations. In contrast, trends for China, Spain and Italy were positive, with t values of 10.416 (p<0.001), 10.204 (p<0.001) and 2.611 (p<0.05), respectively, for relative contribution to publications, and 7.801 (p<0.001), 8.617 (p<0.001) and 3.222 (p<0.01), respectively, for relative contribution to citations (Fig. 2).

Fig. 2 - Trends in the relative contribution in the publication of scientific papers (a) and in number of citations excluding self-citations (b) from 1996 to 2010 in forestry. Data source: SCIMAGO database.

In 2010, the overall percentage of citations to Italian papers was close to that for the United Kingdom (15% vs. 18%, respectively).

National data

The national analyses were conducted for 32 assistant professors (six with non-permanent positions), 32 associate professors and 20 full professors from 15 different universities. Out of the total of 84 authors, 40 were affected by homonymy and/or duplication in one or both databases (39 in WOS and 17 in SCOPUS). For eight authors, homonymy could not be resolved, so they were excluded from the analysis. Of these eight exclusions (2 full professors, 4 associate professors, 2 assistant professors), two resulted from unresolved homonymy in SCOPUS but not in WOS, while six resulted from unresolved homonymy in WOS but not in SCOPUS.

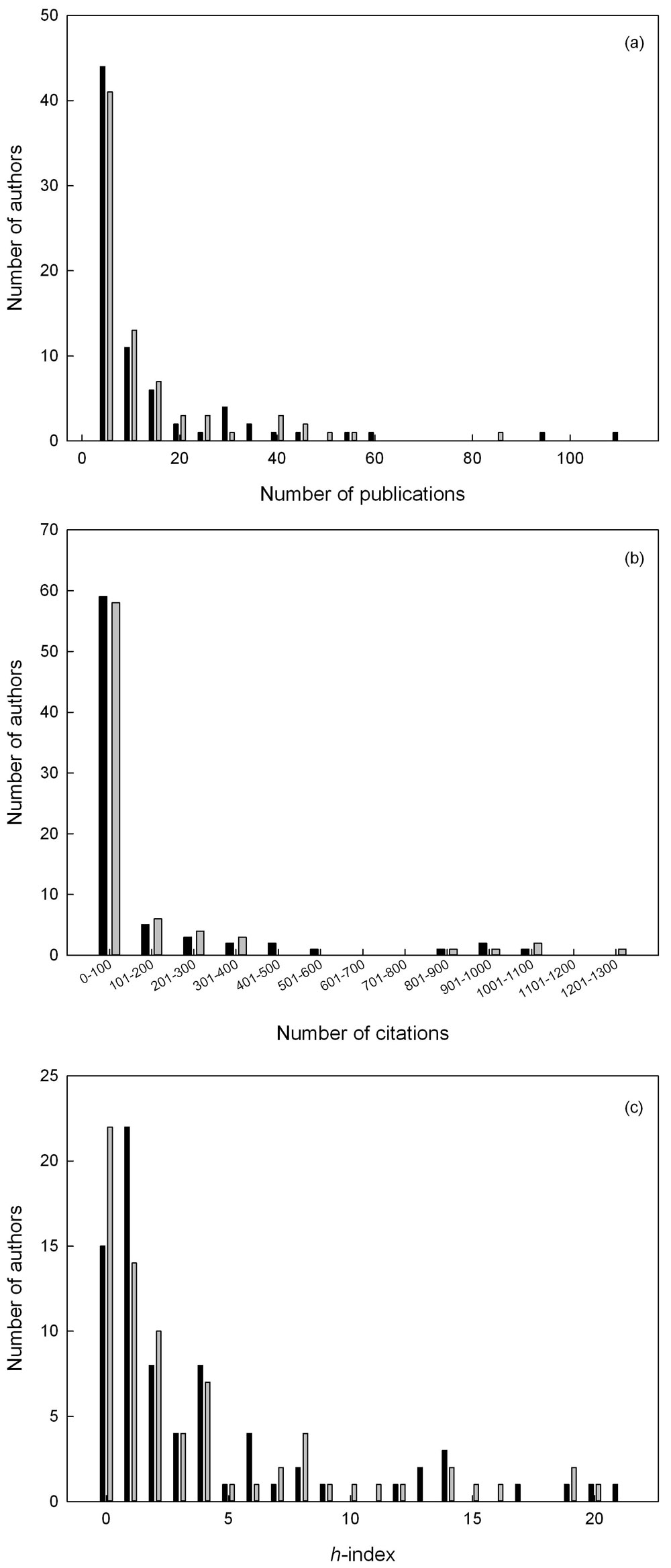

For the remaining 76 authors, based on the available records, the number of publications per author ranged from 0 to 114 in WOS and from 0 to 89 in SCOPUS (Fig. 3a). The total number of citations per author, including self-citations, ranged from 0 to 1663 in WOS (1057 excluding self-citations) and from 0 to 1879 in SCOPUS (1278 excluding self-citations - Fig. 3b). On average, including self-citations, each paper received 6.84 citations in WOS and 7.25 in SCOPUS; the averages excluding self-citations were 5.75 and 5.72, respectively. h-index values ranged from 0 to 21 in WOS and from 0 to 20 in SCOPUS (Fig. 3c).

Fig. 3 - Frequency distributions of the 76 authors considered in this study as for the number of publications (a), citations excluding self-citations (b) and h-index (c), on the basis of WOS (black) and SCOPUS (grey) databases.

These descriptive statistics are presented here only to support the comparison between the WOS and SCOPUS databases. They were calculated from the records available through the connection granted by the University of Molise, which may be incomplete because it may not cover all the journals in which the considered authors published. Furthermore, these values refer only to the 76 authors considered and cannot be extrapolated to the entire population of 84 authors in the Italian university forestry sector.

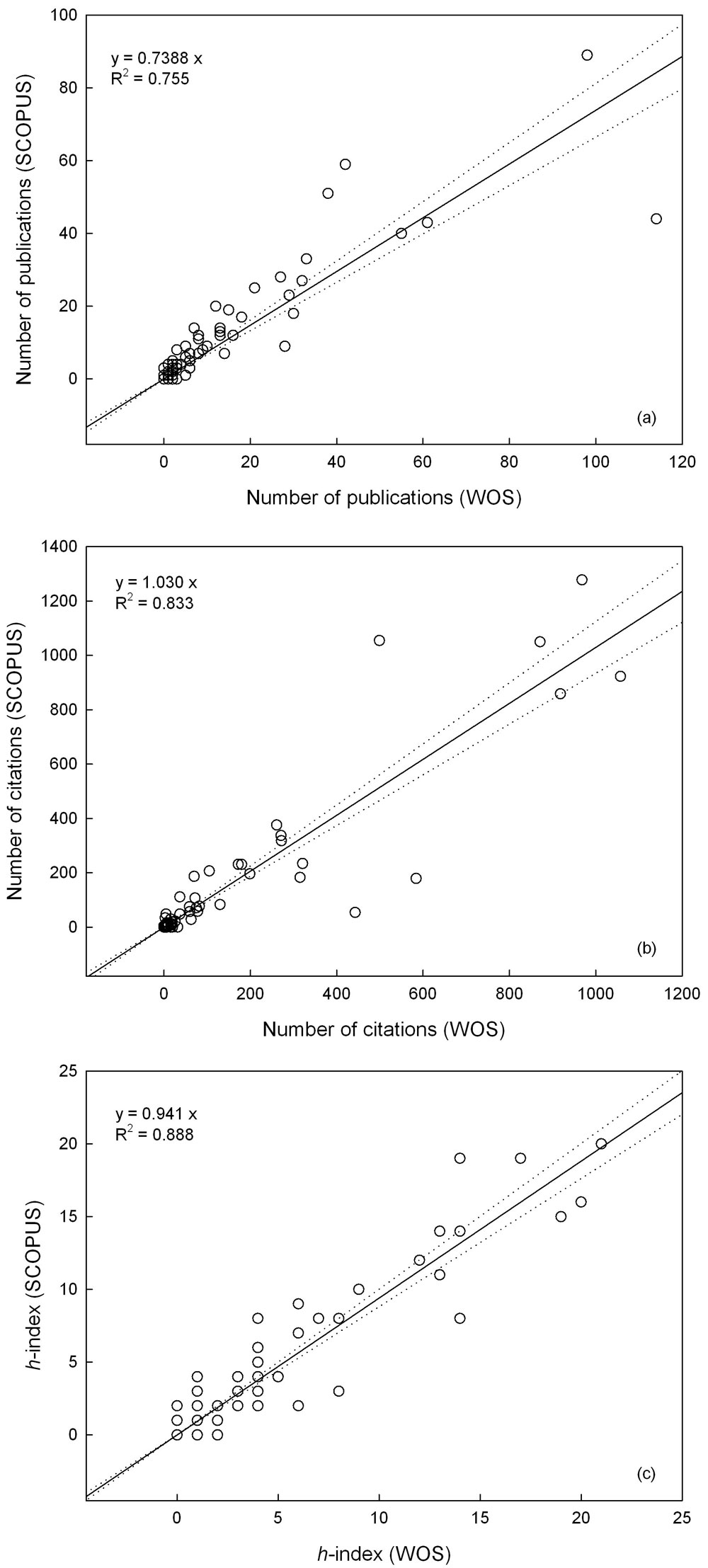

The three Wilcoxon signed-rank tests ([39]) showed that the average values of the three indexes calculated from WOS and SCOPUS for the 76 authors were not significantly different (number of publications: Z = 0.463, p = 0.071; number of citations excluding self-citations: Z = 0.254, p = 0.799; h-index: Z = 0.861, p = 0.389). Values of the three indexes calculated from WOS and SCOPUS for the 76 authors were highly correlated (p<0.001): R² = 0.755 for number of publications (Fig. 4a), R² = 0.833 for citations excluding self-citations (Fig. 4b), and R² = 0.888 for the h-index (Fig. 4c).

Fig. 4 - Correlation with 95% confidence intervals between the values of the three indicators: number of publications (a), number of citations excluding self-citations (b), h-index (c) calculated for the 76 considered authors on the basis of WOS and SCOPUS databases. Please note that the h-index is expressed in integer values, for this reason several dots in the correlation graph (c) overlap each other, apparently resulting in a number of cases lower than 76.

Discussion and conclusions

In recent years, infometrics has developed considerably, partly as a result of the introduction of two new citation databases, SCOPUS and Google Scholar ([7]). SCOPUS has proven to be a valid alternative to WOS; both can be considered true scholarly resources useful for scientific evaluation. Google Scholar, by contrast, is recommended for searching non-traditional forms of publishing ([10]).

Quantitative scientific productivity in forestry, when compared to overall scientific productivity, both at global and Italian levels, is relatively marginal. On the basis of the SCIMAGO database, approximately 0.5% of publications and citations were from the forestry sector. This percentage is probably underestimated because forestry researchers also publish in other subject categories (such as remote sensing, statistics or biology). Papers published in these other thematic areas may not be included under the forestry category in SCOPUS and thus not in SCIMAGO.

Italian scientific productivity in forestry has been increasing for the last 15 years and is now ranked approximately 10th at international level with respect to all three indexes, number of papers published, number of citations and the h-index.

This promising trend for Italian forestry is part of a more general positive trend for the entire Italian research system. Daraio & Moed ([12]) recently reported that analyses using WOS showed that Italian researchers are more productive than researchers in any other European country. However, because the number of researchers is smaller, the number of publications per inhabitant in Italy is also smaller than in the other European countries considered in their study (France, Germany, Netherlands, Spain, Switzerland, United Kingdom).

The positive trend for Italian forestry is at least partially due to increasing internationalization, attributable to the positive effects of globalization. In forestry, international cooperation increased steadily between 1996 and 2010: in this period, the share of papers by Italian authors co-authored with at least one author affiliated with a foreign institution increased from 30% to more than 43%.

For the 76 authors, the mean number of publications, mean number of citations and mean h-index calculated from the WOS and SCOPUS databases were not statistically different. These results confirm the comparability of indexes calculated from the two databases ([7]). WOS had wider coverage of the 76 authors’ publications than SCOPUS (868 vs. 761 papers, respectively), which confirms that WOS is still the leading citation database. However, SCOPUS had more complete citation coverage, with 8851 citations excluding self-citations vs. 8459 for WOS. One limitation of WOS is that self-citations cannot be automatically excluded when calculating the h-index.

This study confirmed the large effects of homonymy and author duplications for both databases. Gurney et al. ([18]) recently reviewed multiple methods for resolving author ambiguity issues including automated computer-based algorithms, sociological and linguistic approaches. WOS and SCOPUS use different methods based on unique author identification codes for circumventing the problem. In addition, both databases request that authors check that the systems correctly assign the authorship.

Both WOS and SCOPUS reveal that the productivity of individual Italian forestry academic authors is quite heterogeneous. Part of this heterogeneity may be attributed to differences in age and number of years in position; for this reason, official research evaluations are usually restricted to a specific time frame. Considering their overall careers, the 10 most productive authors according to WOS published 69% of the papers and received 73% of the citations excluding self-citations; according to SCOPUS, the 10 most productive authors published 59% of the papers and received 70% of the citations. The comparatively large global forestry scientific productivity of Italian researchers is thus attributable to a relatively small number of very productive authors, whereas the majority of authors made only limited contributions. Out of the 76 authors considered, 41 (54%) had five or fewer scientific papers documented by WOS in their career, 10 of whom are full professors. Furthermore, 9 of the 76 authors (12%) had no publications included in WOS.
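The concentration figures above can be computed with a sketch like the following. It assumes, since the text does not specify it, that the "most productive" authors are those with the most publications and that their share of citations is computed for the same authors; the function names and the (publications, citations) tuple layout are hypothetical.

```python
def top_k_shares(authors: list[tuple[int, int]], k: int = 10) -> tuple[float, float]:
    """Share of all publications and citations held by the k authors with most publications.

    `authors` holds one hypothetical (publications, citations) tuple per author.
    """
    ranked = sorted(authors, key=lambda a: a[0], reverse=True)  # most publications first
    top = ranked[:k]
    total_pubs = sum(p for p, _ in authors)
    total_cites = sum(c for _, c in authors)
    pub_share = sum(p for p, _ in top) / total_pubs if total_pubs else 0.0
    cite_share = sum(c for _, c in top) / total_cites if total_cites else 0.0
    return pub_share, cite_share


def count_low_output(authors: list[tuple[int, int]], max_pubs: int = 5) -> int:
    """Number of authors with at most `max_pubs` publications."""
    return sum(1 for p, _ in authors if p <= max_pubs)
```

Ranking by citations instead would change which authors fall in the top group, so the choice should be stated whenever such shares are reported.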

Both WOS and SCOPUS have advantages and disadvantages that must be carefully considered when appraising authors’ scientific productivity. On the basis of this experience, WOS was more complete than SCOPUS, but WOS does not currently permit the automatic exclusion of self-citations when calculating the h-index.

This study confirmed that the h-index is a robust bibliometric index producing similar values for the two databases, and it may be recommended for operational performance assessments. However, the differences in citation counts between WOS and SCOPUS create a dilemma for science policy makers ([7]). To address this problem, we recommend further studies assessing the impact of citation database selection on the performance evaluation of researchers in different subject categories.

In the future, bibliometrics will likely come to be preferred to the peer-review processes for research evaluation because it is faster, easier and cheaper, and produces more transparent results ([3]).

Acknowledgements

I’m grateful for the help and the encouragement received from Prof. Piermaria Corona (University of Tuscia, member of ANVUR expert group for the national evaluation of research quality in Italy) and Dr. Ronald E. McRoberts (Northern Research Station, U.S. Forest Service). I also acknowledge three anonymous referees who greatly contributed to the improvement of the earlier versions of this paper.

References


Authors’ Info

Authors’ Affiliation

ECOGEOFOR - Laboratorio di Ecologia e Geomatica Forestale, Dipartimento di Bioscienze e Territorio, Università degli Studi del Molise, c.da Fonte Lappone snc, I-86090 Pesche (Isernia - Italy)

Corresponding author

Paper Info

Citation

Chirici G (2012). Assessing the scientific productivity of Italian forest researchers using the Web of Science, SCOPUS and SCIMAGO databases. iForest 5: 101-107. - doi: 10.3832/ifor0613-005

Academic Editor

Marco Borghetti

Paper history

Received: Feb 08, 2012

Accepted: Apr 19, 2012

First online: May 30, 2012

Publication Date: Jun 29, 2012

Publication Time: 1.37 months

Copyright Information

© SISEF - The Italian Society of Silviculture and Forest Ecology 2012

Open Access

This article is distributed under the terms of the Creative Commons Attribution-Non Commercial 4.0 International (https://creativecommons.org/licenses/by-nc/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.
